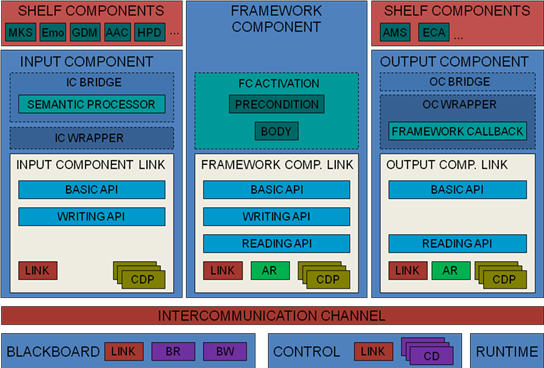

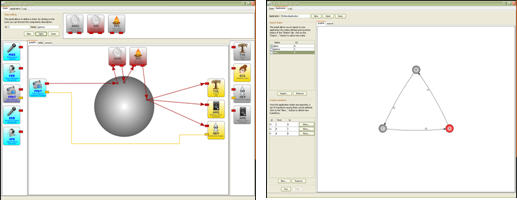

The architecture implements the concept of adaptivity to guarantee independence from the modalities, the devices, the users and the domain/environment, providing the "glue" to easily connect, integrate and apply components. An intuitive graphical interface, the CALLAS Authoring Tool (CAT), supports application designers: components can be imported (from the Shelf or from other sources) and associated with states, transitions, events and actions. The resulting configuration file is sent to the Framework for execution.
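The configuration model described above (imported components bound to states, transitions, events and actions, then saved to a file for the Framework) can be illustrated with a minimal Python sketch. The actual CAT file format and Framework API are not described here, so every class, field, component name and event name below (`Transition`, `Configuration`, `speech_emotion`, `user_detected`, and so on) is hypothetical, and JSON is assumed as the file format purely for illustration.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Transition:
    # Hypothetical: move from `source` to `target` when `event` arrives,
    # then fire the listed actions.
    source: str
    event: str
    target: str
    actions: list[str] = field(default_factory=list)


@dataclass
class Configuration:
    # Hypothetical stand-in for the configuration produced by CAT.
    components: list[str]
    initial_state: str
    transitions: list[Transition]

    def to_json(self) -> str:
        """Serialize to JSON (an assumed format, not the real CAT file)."""
        return json.dumps(asdict(self), indent=2)

    def run(self, events):
        """Replay events; return the final state and the actions fired."""
        state = self.initial_state
        fired = []
        for event in events:
            for t in self.transitions:
                if t.source == state and t.event == event:
                    state = t.target
                    fired.extend(t.actions)
                    break  # first matching transition wins
            # Events with no matching transition leave the state unchanged.
        return state, fired


# Hypothetical component, state, event and action names.
config = Configuration(
    components=["speech_emotion", "video_emotion", "avatar"],
    initial_state="idle",
    transitions=[
        Transition("idle", "user_detected", "listening", ["avatar.greet"]),
        Transition("listening", "emotion_positive", "engaged", ["avatar.smile"]),
        Transition("engaged", "user_left", "idle", ["avatar.wave"]),
    ],
)

final_state, actions = config.run(["user_detected", "emotion_positive", "user_left"])
print(final_state, actions)
```

A first-match rule keeps the sketch short; the Framework's real event dispatch and the way it fuses input from several components may well differ.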

Recently released as open source, the CALLAS Framework and CAT offer easy design and integration patterns to the open community of developers for exploiting the project findings.


Suggested reading:

- An Open Source Integrated Framework for Rapid Prototyping of Multimodal Affective Applications in Digital Entertainment: Abstract

A first Demonstrator was initially provided to tune how well the Framework responds to the requirements of the Shelf and the Showcases. An evaluation survey based on this screencast was launched on the C3 to extend the discussion of the Framework's conceptual architecture model, in particular the functional relationships between the different Shelf components and their semantic fusion and interpretation.